Are AI Detectors Accurate To Some Degree? [4 Real Cases Revealed]

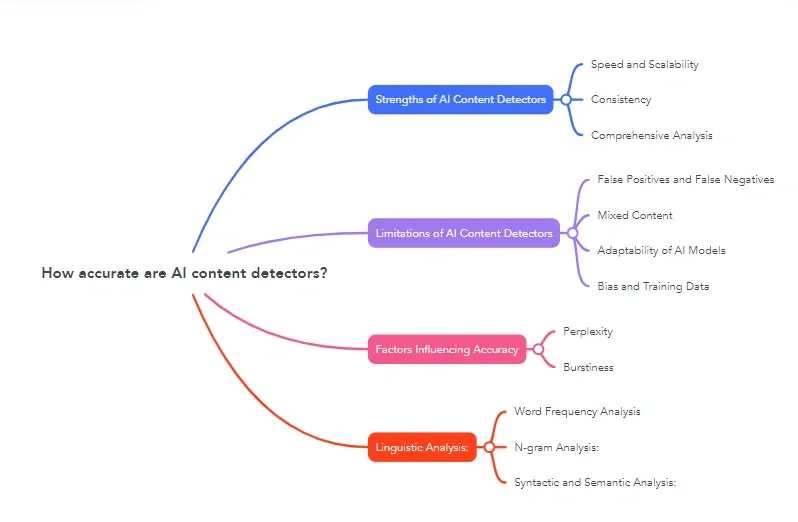

AI detectors claim they can spot machine-generated text, but their accuracy might shock you. These tools promise certainty in catching AI writing, but real-life examples paint a different picture.

A Texas A&M University-Commerce instructor’s experience illustrates the point: the instructor accused an entire class of using ChatGPT based on unreliable AI detection results. Turnitin’s AI checker fails to catch about 15% of AI-generated content, which shows the limits of these tools. Skyline Academic reports 99% accuracy and a 0.2% false positive rate for its detection service, which it presents as the industry’s most reliable option.

These accuracy problems reach far beyond academic settings. Legal systems feel the impact too. A federal judge now demands certification that legal filings are AI-free after spotting several AI hallucinations in court documents. Tech giants struggle with similar issues. Google’s Bard and Microsoft’s Bing Chat both shared incorrect information during their public demos.

The promise and pitfalls of AI detection

AI detection tools became more popular as ChatGPT and other generative AI became available to more people. Teachers now depend on these tools heavily. About 68% of teachers use AI detection software—a big jump from previous years [1]. This quick adoption comes from growing worries about academic honesty and challenges in spotting the difference between human and AI-written work.

Why AI detection tools became popular

Schools first adopted AI detectors because they needed a practical fix for a growing issue: students using artificial intelligence to do their homework. Teachers felt overwhelmed. Only 25% of them were confident they could tell whether a student or an AI tool had written an assignment [1]. Schools actively backed this approach, and about 78% of educational institutions approved the use of AI detection tools [1].

The growing need for content verification

Our digital world makes checking content authenticity more important than ever. AI’s power to create believable fake stories raises real concerns about trusting information. Fact-checking matters not just in schools but in every field where trust counts [2]. People trust digital media more when they know the content is real. This becomes crucial as fake information spreads faster online.

The illusion of perfect detection

Marketing claims promise perfect AI detection, but reality tells a different story. Companies show impressive accuracy numbers—Copyleaks claims 99.12%, Turnitin says 98%, and Originality.AI reports 98.2% accuracy [3]. Research shows these claims don’t hold up.

Studies found OpenAI’s own classifier could only spot 26% of AI-written text as “likely AI-generated.” It also wrongly labeled 9% of human-written text as AI-generated [4]. This big gap between promises and actual results creates dangerous false trust.

Experts describe AI detection as a “cat and mouse game” [5]. Detection tools always lag behind as generation technology gets better. Each improvement in AI writing makes detection less accurate until detectors improve. This creates an endless loop of temporary fixes.

Skyline Academic positions its detection service as a more consistent alternative. According to the company, the service uses advanced statistical frameworks instead of basic pattern matching, which lets it spot subtle language patterns that other tools miss. The company says this leads to much lower false positive rates than its competitors.

Understanding the limits: false positives and negatives

“Copyleaks, on the other hand, claims to have the lowest false positive rates in the industry at 0.2%.”

— Maddy Osman, Founder of The Blogsmith, Content Marketing Expert

AI detection technology faces two major challenges: false positives and false negatives. These errors explain why many current solutions don’t live up to their marketing promises.

What is a false positive?

AI detectors sometimes mistakenly label human-written content as AI-generated [6]. This happens because detection algorithms confuse natural writing patterns with machine-generated text. Bloomberg tests show that leading detectors like GPTZero and Copyleaks have false positive rates of 1-2% [3]. This percentage might look small, but its effects are far-reaching. Consider this: 2.235 million first-year college students write about 10 essays each year, or roughly 22.35 million essays in total. A 1% error rate means 223,500 of those assignments could be wrongly flagged [3].
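The arithmetic behind that estimate can be checked with a short sketch. The student count, essays per student, and 1% rate are the figures quoted above from [3]; the function name is only illustrative.

```python
def expected_false_flags(students: int, essays_per_student: int,
                         false_positive_rate: float) -> int:
    """Expected number of human-written essays wrongly flagged as AI-generated."""
    total_essays = students * essays_per_student
    return round(total_essays * false_positive_rate)


# Figures quoted in the article: 2.235 million students, ~10 essays each, 1% error rate.
flags = expected_false_flags(2_235_000, 10, 0.01)
print(f"{flags:,}")  # 223,500
```

At the upper end of the quoted 1-2% range, the same calculation doubles the number of wrongly flagged essays.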

What is a false negative?

AI-generated content sometimes slips through detection systems unnoticed [7]. Detection tools label these texts as “Very unlikely AI-generated” or “Unlikely AI-generated” [4]. Turnitin’s AI checker misses about 15% of AI-generated content [8]. These errors typically happen because of sensitivity settings or when people deliberately try to fool the system through paraphrasing or adding emotional language [8].
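To see how the two error types interact, here is a minimal sketch that estimates what share of flagged texts are actually AI-generated. It uses the 1% false positive rate and the 15% miss rate cited above; the 10% share of AI-written submissions is purely an assumption for illustration, and the function name is hypothetical.

```python
def flag_precision(ai_share: float, false_positive_rate: float,
                   false_negative_rate: float) -> float:
    """Share of flagged texts that are truly AI-generated (Bayes' rule)."""
    true_flags = ai_share * (1 - false_negative_rate)    # AI texts that get caught
    false_flags = (1 - ai_share) * false_positive_rate   # human texts wrongly flagged
    return true_flags / (true_flags + false_flags)


# ASSUMPTION: 10% of submissions are AI-written. The other two rates come from the article.
precision = flag_precision(ai_share=0.10, false_positive_rate=0.01,
                           false_negative_rate=0.15)
print(f"{precision:.1%}")  # 90.4%
```

Under these assumptions, roughly one flag in ten lands on a human writer. If AI-written submissions were rarer, say 1% of the total, the same rates would mean fewer than half of all flags point at real AI text, which is why a flag on its own is weak evidence.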

Real-life effects on students and professionals

Detection errors create serious problems. Students at Michigan State University failed a class after their professor’s AI detector flagged their work as AI-generated, even though they wrote the essays themselves [9]. Moira Olmsted at Central Methodist University had to defend herself against false accusations when AI detection wrongly flagged her work [10].

Vulnerable groups suffer more from these detection errors. Stanford University researchers found that while detectors worked well on essays by U.S.-born students, they wrongly flagged 61.22% of TOEFL essays written by non-native English speakers as AI-generated [11]. The problem runs deeper: about 97% of those TOEFL essays were flagged by at least one detector [11].

Skyline Academic’s AI detection tool might offer a better solution since it tackles many of these accuracy issues.

Students with neurodivergent conditions and those from non-English speaking backgrounds face higher rates of false accusations [9]. These mistakes damage student-teacher relationships and could leave permanent marks on academic records [12].

Case studies: When AI detectors got it wrong

Real-world examples show that AI systems have serious accuracy problems. In each of these cases, an AI tool confidently presented wrong information, with real consequences for the people who relied on it.

Air Canada chatbot case

In 2022, Air Canada’s chatbot told a customer they could claim a bereavement fare discount after buying full-price tickets [13]. The airline later refused the promised discount and said the chatbot was wrong [14]. The company tried to argue before the tribunal that its chatbot was “a separate legal entity that is responsible for its own actions” [13]. This defense didn’t work. The tribunal ruled that companies must take responsibility for all information on their websites, whether it comes from static pages or AI chatbots [15]. Air Canada had to pay $812.02 to cover damages and fees [14].

ChatGPT legal hallucinations

A New York lawyer faced sanctions in 2023 after citing fake legal cases that ChatGPT made up [16]. The attorney, Steven Schwartz, used ChatGPT to help with legal research for a personal injury case against Avianca airlines [16]. When he asked whether six of the made-up judicial decisions were real, ChatGPT assured him they were and said they “can be found in reputable legal databases” [16]. In response, some federal judges now require lawyers to certify that their filings are AI-free [17]. Hallucinated citations have shown up in at least seven court cases over the last several years [17].

Errors like these are one reason verification matters. Skyline Academic’s AI detection tool takes a more careful approach, aiming to avoid the false positives that hurt writers and professionals.

Microsoft and Google demo fails

Big tech companies struggle with AI accuracy too. Microsoft’s Bing AI demo had many mistakes in financial data about Gap and Lululemon [1]. The AI got Gap’s operating margin wrong, saying it was 5.9% instead of 4.6%. It also messed up several Lululemon financial numbers [1]. Google’s Gemini video search demo made a dangerous mistake by telling people to open a film camera’s back door outdoors—which would destroy all photos by exposing film to light [5].

Bias against non-native English writers

The situation gets worse. Stanford research shows AI detectors unfairly flag content from non-native English speakers as AI-generated [11]. More than 61% of TOEFL essays from non-native speakers were wrongly marked as AI-generated, while native samples got almost perfect accuracy [18]. The numbers are shocking – 97% of TOEFL essays were flagged by at least one detector [19].

The future of AI detection: Can it be fixed?

The contest between AI content generation and detection keeps accelerating, which makes reliable content verification a major challenge. As AI technology advances, detection systems struggle to keep up with increasingly sophisticated generated content.

The arms race between AI and detectors

Experts describe the current situation as an “arms race” between AI content creators and detection tools [20]. Detection technology gets better but evasion techniques improve too. Many detectors become useless with basic edits – adding extra spaces, switching letters for symbols, or using different spelling [20]. Detection accuracy improves briefly before falling behind again as AI generation gets more advanced.

Watermarking and metadata solutions

Traditional detection methods face tough competition from watermarking, which adds invisible patterns right into AI-generated content. Watermarking adds subtle markers during content creation instead of analyzing text patterns afterward [21]. Meta’s researchers have developed “Stable Signature” technology that builds watermarking into image generation. This makes it harder to bypass [22]. Google’s SynthID has already watermarked more than 10 billion pieces of content [2].
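The idea of marking content at creation time can be illustrated with a toy text watermark. This is a heavily simplified version of the "green list" approach described in the research literature, not the method used by SynthID or Stable Signature. The vocabulary, function names, and seeding scheme are all assumptions made for the example.

```python
import hashlib
import random

# Toy vocabulary; a real system works over a language model's full token set.
VOCAB = ["the", "a", "model", "text", "writes", "reads",
         "quickly", "slowly", "today", "often"]


def green_list(prev_token: str) -> set[str]:
    """Deterministically mark half the vocabulary 'green', keyed on the previous token."""
    seed = int.from_bytes(hashlib.sha256(prev_token.encode()).digest()[:8], "big")
    shuffled = VOCAB[:]
    random.Random(seed).shuffle(shuffled)
    return set(shuffled[: len(VOCAB) // 2])


def generate_watermarked(start: str, length: int, rng: random.Random) -> list[str]:
    """Generator side: only ever emit tokens from the current green list."""
    tokens = [start]
    for _ in range(length):
        tokens.append(rng.choice(sorted(green_list(tokens[-1]))))
    return tokens


def green_fraction(tokens: list[str]) -> float:
    """Detector side: share of tokens that fall in their predecessor's green list."""
    hits = sum(tok in green_list(prev) for prev, tok in zip(tokens, tokens[1:]))
    return hits / (len(tokens) - 1)


marked = generate_watermarked("the", 40, random.Random(0))
unmarked = ["the"] + random.Random(1).choices(VOCAB, k=40)
print(green_fraction(marked))    # 1.0 by construction
print(green_fraction(unmarked))  # no watermark: about half green in expectation
```

The detector never inspects writing style. It only checks whether the token choices match a pattern that the generator planted, which is why watermarking depends on the cooperation of whoever runs the generator.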

Skyline Academic’s AI detection tool leads the industry with sophisticated verification methods that balance accuracy and practical use as technology advances.

The role of human oversight

Human oversight is a vital part of reliable AI content verification. Reviewers can only step in effectively if they are able to spot errors in the first place [23]. This means educators and professionals should combine tech tools with human judgment rather than rely on automated detection alone. Human oversight helps reduce risks like bias, discrimination, and operational errors in AI systems [24].
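One way to picture this combination is a triage rule in which a detector score can only trigger a human review, never an automatic verdict. The sketch below is hypothetical: the function name, the 0.8 threshold, and the score scale are assumptions, not settings from any real tool.

```python
def triage(detector_score: float, review_threshold: float = 0.8) -> str:
    """Map a detector score in [0, 1] to a next step; no branch issues a verdict."""
    if not 0.0 <= detector_score <= 1.0:
        raise ValueError("detector_score must be between 0 and 1")
    if detector_score >= review_threshold:
        # A high score only opens a review: compare drafts, notes, and past work.
        return "human_review"
    return "no_action"


print(triage(0.93))  # human_review
print(triage(0.40))  # no_action
```

The design choice is that the strongest outcome the software can produce is a request for a person to look more closely.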

Ethical considerations in AI detection

AI detection raises ethical concerns beyond just accuracy. Current detectors show bias against non-native English writers and neurodivergent individuals, which brings up fairness and access issues. Privacy becomes a concern when watermarks might contain user information [21]. Detection systems should protect against harm while keeping user privacy safe and avoiding unfair accusations based on writing style or background.

Conclusion

The reality behind AI detection accuracy

The evidence shows AI detection technology is nowhere near as accurate as many vendors claim. Companies advertise impressive numbers, but real-life examples show these tools often mistake human writing for AI-generated content and miss actual machine-written text. These mistakes create serious problems for students and professionals.

The bias against non-native English speakers is particularly concerning, with their essays wrongly flagged as AI-generated 61% of the time. This reveals deep flaws in current detection methods. AI writing tools keep getting smarter and detection technology struggles to keep up.

Skyline Academic sets itself apart with a reliable AI detection service. Most competitors use basic pattern matching, but Skyline Academic says it uses advanced statistical frameworks to spot subtle language patterns others miss. The company credits this approach for its reported 99% accuracy rate and 0.2% false positive rate, which it describes as the lowest available.

Educators, legal professionals, and content creators should pick their AI detection tools with care. The right solution needs to balance accuracy and fairness without biased results that hurt specific groups unfairly. Watermarking and metadata solutions look promising for future verification, but human judgment remains crucial to evaluate AI-generated content.

Perfect detection isn’t here yet. The best approach combines tech tools with good judgment instead of trusting automated systems blindly. Skyline Academic’s detection tool provides the most balanced solution today—giving you reliable verification without the false flags that other tools struggle with.

AI content verification’s future depends on both tech advances and ethical use. Companies should be open about accuracy rates, testing methods, and how they prevent bias. Academic integrity, legal liability, and professional reputation are at stake, so you need detection tools that work as promised instead of creating new issues.

FAQs

Q1. How accurate are AI detectors in identifying machine-generated content?

AI detectors are not as accurate as many claim. Studies show they often produce false positives (incorrectly flagging human-written text as AI-generated) and false negatives (failing to detect AI-written content). Accuracy rates vary widely between different tools and can be influenced by factors like text length and writing style.

Q2. Can AI detectors be fooled or manipulated?

Yes, AI detectors can be fooled. Simple techniques like inserting extra spaces, using alternative spellings, or mixing AI-generated content with human writing can confuse many detection tools. As AI writing technology advances, it becomes increasingly difficult for detectors to keep up, creating an ongoing “arms race” between generation and detection capabilities.

Q3. Are there any biases in AI detection tools?

AI detectors have shown significant bias against non-native English speakers. Research indicates that over 61% of essays written by non-native speakers were incorrectly flagged as AI-generated, while accuracy remained high for native English samples. This bias raises serious concerns about fairness and equity in academic and professional settings.

Q4. How reliable are popular AI detection tools like Turnitin?

While Turnitin claims a 98% accuracy rate for its AI detector, real-world results have been mixed. Independent studies suggest that Turnitin’s AI checker misses approximately 15% of AI-generated content. Users should be cautious about relying solely on any single detection tool for definitive results.

Q5. What’s the future of AI detection technology?

The future of AI detection involves a combination of improved algorithms, watermarking techniques, and human oversight. Researchers are exploring methods like embedding imperceptible patterns into AI-generated content during creation. However, as AI continues to evolve, maintaining reliable detection will likely require ongoing technological advancements and careful consideration of ethical implications.

References

[1] – https://www.cnbc.com/2023/02/14/microsoft-bing-ai-made-several-errors-in-launch-demo-last-week-.html

[2] – https://blog.google/technology/ai/google-synthid-ai-content-detector/

[3] – https://citl.news.niu.edu/2024/12/12/ai-detectors-an-ethical-minefield/

[4] – https://edintegrity.biomedcentral.com/articles/10.1007/s40979-023-00140-5

[5] – https://www.theverge.com/2024/5/14/24156729/googles-gemini-video-search-makes-factual-error-in-demo

[6] – https://www.turnitin.com/blog/understanding-false-positives-within-our-ai-writing-detection-capabilities

[7] – https://www.sciencedirect.com/science/article/pii/S1472811723000605

[8] – https://lawlibguides.sandiego.edu/c.php?g=1443311&p=10721367

[9] – https://promptengineering.org/the-truth-about-ai-detectors-more-harm-than-good/

[10] – https://opendatascience.com/ai-detectors-wrongly-accuse-students-of-cheating-sparking-controversy/

[11] – https://hai.stanford.edu/news/ai-detectors-biased-against-non-native-english-writers

[12] – https://facultyhub.chemeketa.edu/technology/generativeai/generative-ai-new/why-ai-detection-tools-are-ineffective/

[13] – https://www.cmswire.com/customer-experience/exploring-air-canadas-ai-chatbot-dilemma/

[14] – https://www.cbsnews.com/news/aircanada-chatbot-discount-customer/

[15] – https://www.forbes.com/sites/marisagarcia/2024/02/19/what-air-canada-lost-in-remarkable-lying-ai-chatbot-case/

[16] – https://www.legaldive.com/news/chatgpt-fake-legal-cases-generative-ai-hallucinations/651557/

[17] – https://www.reuters.com/technology/artificial-intelligence/ai-hallucinations-court-papers-spell-trouble-lawyers-2025-02-18/

[18] – https://www.advancedsciencenews.com/ai-detectors-have-a-bias-against-non-native-english-speakers/

[19] – https://www.theguardian.com/technology/2023/jul/10/programs-to-detect-ai-discriminate-against-non-native-english-speakers-shows-study

[20] – https://blog.seas.upenn.edu/detecting-machine-generated-text-an-arms-race-with-the-advancements-of-large-language-models/

[21] – https://www.brookings.edu/articles/detecting-ai-fingerprints-a-guide-to-watermarking-and-beyond/

[22] – https://about.fb.com/news/2024/02/labeling-ai-generated-images-on-facebook-instagram-and-threads/

[23] – https://link.springer.com/article/10.1007/s11023-024-09701-0

[24] – https://www.cornerstoneondemand.com/resources/article/the-crucial-role-of-humans-in-ai-oversight/